Information Software and the Graphical Interface

Abstract

#The ubiquity of frustrating, unhelpful software interfaces has motivated decades of research into “Human-Computer Interaction.” In this paper, I suggest that the long-standing focus on “interaction” may be misguided. For a majority subset of software, called “information software,” I argue that interactivity is actually a curse for users and a crutch for designers, and users’ goals can be better satisfied through other means.

#Information software design can be seen as the design of context-sensitive information graphics. I demonstrate the crucial role of information graphic design, and present three approaches to context-sensitivity, of which interactivity is the last resort. After discussing the cultural changes necessary for these design ideas to take root, I address their implementation. I outline a tool which may allow designers to create data-dependent graphics with no engineering assistance, and also outline a platform which may allow an unprecedented level of implicit context-sharing between independent programs. I conclude by asserting that the principles of information software design will become critical as technology improves.

#Although this paper presents a number of concrete design and engineering ideas, the larger intent is to introduce a “unified theory” of information software design, and provide inspiration and direction for progressive designers who suspect that the world of software isn’t as flat as they’ve been told.

Scope and terminology

#“Software,” as used here, refers to user-facing personal desktop software, whether on a native or web platform. “Software design” describes all appearance and behaviors visible to a user; it approaches software as a product. “Software engineering” implements the design on a computer; it approaches software as a technology. These are contentious definitions; hopefully, this paper itself will prove far more contentious.

Contents

What is software?

- Of software and sorcery. Is “interaction design” the cure for frustrating software, or the disease itself?

- What is software design? Software is not a new and mysterious medium, but a fusion of two old ones.

- What is software for? People turn to software to learn, to create, and to communicate.

- Manipulation software design is hard. Creating software for creating is tricky business.

- Most software is information software. People spend more time learning than creating.

Graphic design

- Information software design is graphic design. People learn by looking. Looks are all that matters.

- Demonstration: Showing the data. Redesigning Amazon as an information graphic.

- Demonstration: Arranging the data. Redesigning Yahoo! Movies as an information graphic.

Context-sensitivity

- Context-sensitive information graphics. Software trumps print by showing only what’s relevant.

- Inferring context from the environment. The outside world can suggest what’s relevant.

- Inferring context from history. Memories of the past can suggest what’s relevant.

Interactivity

- Interactivity considered harmful. The user can suggest what’s relevant, but only as a last resort.

- Reducing interaction. Approaches to easing the pain.

- How did we get here? The popular focus on interactivity is a vestige of another era.

Intermission

- Case study: Train schedules. Designing a trip planner as an information graphic.

- Demonstration: Trip planning redux. Redesigning Southwest Airlines as an information graphic.

Changing the world

- Designing the information software revolution. Five steps from artifice to art form.

- Designing a design tool. Dynamic graphics without the programming.

- Engineering inference from history. How software can learn from the past.

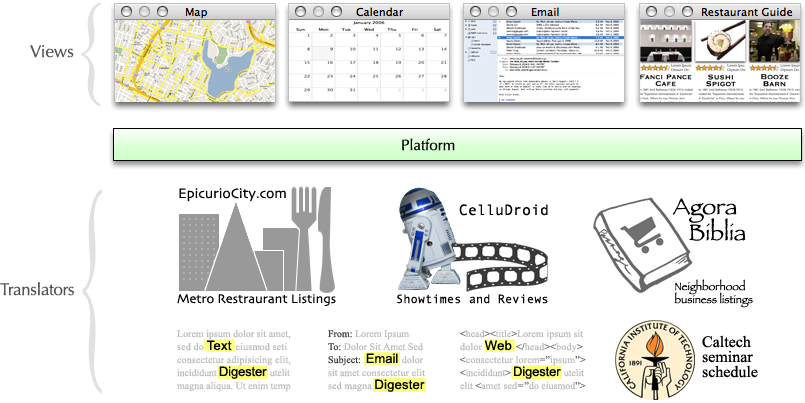

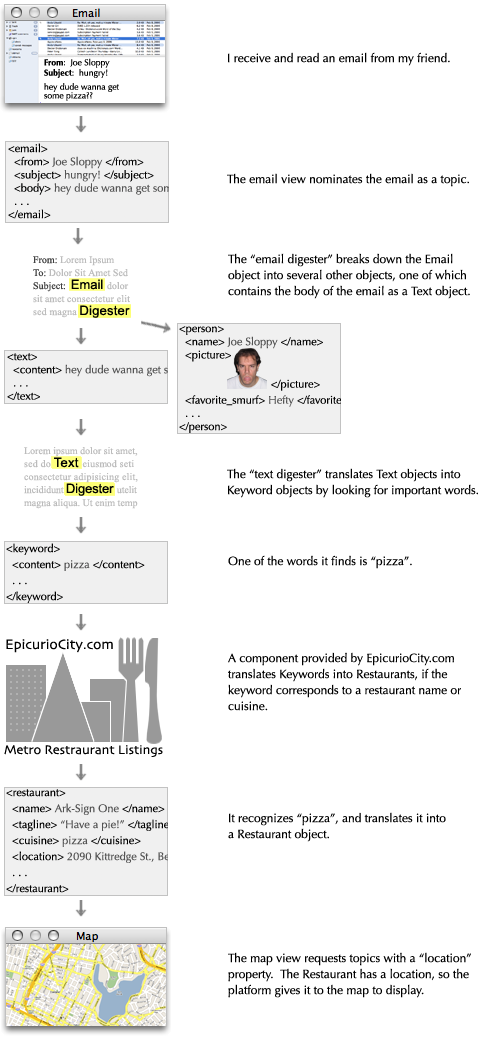

- Engineering inference from the environment. A platform for implicit communication between software.

- Information and the world of tomorrow. Why all this matters.

Of software and sorcery

#A computational process is indeed much like a sorcerer’s idea of a spirit. It cannot be seen or touched. It is not composed of matter at all. However, it is very real. It can perform intellectual work. It can answer questions. It can affect the world by disbursing money at a bank or by controlling a robot arm in a factory. The programs we use to conjure processes are like a sorcerer’s spells.

—Abelson and Sussman, Structure and Interpretation of Computer Programs (1984)

#Merlin had it easy—raising Stonehenge was a mere engineering challenge. He slung some weighty stones, to be sure, but their placement had only to please a subterranean audience whose interest in the matter was rapidly decomposing. The dead are notoriously unpicky.

#Today’s software magicians carry a burden heavier than 13-foot monoliths—communication with the living. They often approach this challenge like Geppetto’s fairy—attempting to instill the spark of life into a mechanical contraption, to create a Real Boy. Instead, their vivified creations often resemble those of Frankenstein—helpless, unhelpful, maddeningly stupid, and prone to accidental destruction.

#This is a software crisis, and it isn’t news. For decades, the usability pundits have devoted vim and vitriol to a crusade against frustrating interfaces. Reasoning that the cure for unfriendly software is to make software friendlier, they have rallied under the banner of “interaction design,” spreading the gospel of friendly, usable interactivity to all who would listen.

#Yet, software has remained frustrating, and as the importance of software to society has grown, so too has the crisis. The crusade marches on, with believers rarely questioning the sacred premise—that software must be interactive in the first place. That software is meant to be “used.”

#I suggest that the root of the software crisis is an identity crisis—an unclear understanding of what the medium actually is, and what it’s for. Perhaps the spark of life is misdirected magic.** A good introduction to the crisis is Alan Cooper’s The Inmates Are Running The Asylum (1999). Essential concepts of interactive design are presented in Don Norman’s Design Of Everyday Things (2002), Jef Raskin’s The Humane Interface (2000), and Cooper’s About Face (2003).

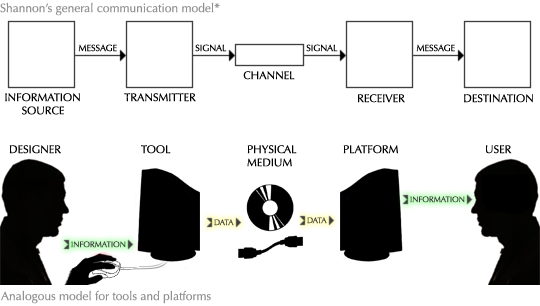

What is software design?

#A person experiences modern software almost exclusively through two channels:

- She reads and interprets pictures on a screen.

- She points and pushes at things represented on the screen, using a mouse as a proxy finger.

#Thus, software design involves the design of two types of artifact:

- Pictures.

- Things to push.

#These are not brave new realms of human endeavor. We share the blood of cavemen who pushed spears into mammoths and drew pictures of them in the living room. By now, these two activities have evolved into well-established design disciplines: graphic design and industrial design.

#Graphic design is the art of conveying a message on a two-dimensional surface. This is a broad field, because people have such a variety of messages to convey—identity, social status, emotion, persuasion, and so on. Most relevant to software is a branch that Edward Tufte calls information design—the use of pictures to express knowledge of interest to the reader. Some products of conventional information graphic design include bus schedules, telephone books, newspapers, maps, and shopping catalogs. A good graphic designer understands how to arrange information on the page so the reader can ask and answer questions, make comparisons, and draw conclusions.** Edward Tufte, The Visual Display of Quantitative Information (2001).

#When the software designer defines the visual representation of her program, when she describes the pictures that the user will interpret, she is doing graphic design, whether she realizes this or not.

#Industrial design is the art of arranging and shaping a physical product so it can be manipulated by a person. This too is a broad field, because people work with such a variety of objects—cutlery to chairs, cell phones to cars. A good industrial designer understands the capabilities and limitations of the human body in manipulating physical objects, and of the human mind in comprehending mechanical models. A camera designer, for example, shapes her product to fit the human hand. She places buttons such that they can be manipulated with index fingers while the camera rests on the thumbs, and weights the buttons so they can be easily pressed in this position, but won’t trigger by accident. Just as importantly, she designs an understandable mapping from physical features to functions—pressing a button snaps a picture, pulling a lever advances the film, opening a door reveals the film, opening another door reveals the battery.

#Although software is the archetypical non-physical product, modern software interfaces have evolved overtly mechanical metaphors. Buttons are pushed, sliders are slid, windows are dragged, icons are dropped, panels extend and retract. People are encouraged to consider software a machine—when a button is pressed, invisible gears grind and whir, and some internal or external state is changed. Manipulation of machines is the domain of industrial design.

#When the software designer defines the interactive aspects of her program, when she places these pseudo-mechanical affordances and describes their behavior, she is doing a virtual form of industrial design. Whether she realizes it or not.

#The software designer can thus approach her art as a fusion of graphic design and industrial design. Now, let’s consider how a user approaches software, and more importantly, why.

What is software for?

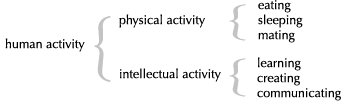

#Software is for people. To derive what software should do, we have to start with what people do. Consider the following taxonomy of human activity:** There are any number of ways of breaking down the spectrum of human activity. I don’t claim that the subdivision given here is definitive, but that it’s useful. Consider it a set of basis vectors into the space of human activity. Different basis sets are helpful for reasoning about different problems, but they all describe the same space.

#At the present, software can’t do much for physical needs—if your avatar eats a sandwich, you remain hungry. But people are increasingly shifting their intellectual activities to the virtual world of the computer. This suggests three general reasons why a person will turn to software:

- To learn.

- To create.

- To communicate.

#I propose that software can be classified according to which of these needs it serves. I will call these categories information software, manipulation software, and communication software.

#Information software serves the human urge to learn. A person uses information software to construct and manipulate a model that is internal to the mind—a mental representation of information. Good information software encourages the user to ask and answer questions, make comparisons, and draw conclusions. A person would use recipe software, for example, to decide what to cook for dinner. She would learn about various dishes (where “learning” could be as informal as a quick skim for something tasty that contains ingredients on hand), compare her options, and make her decision. In effect, she is constructing an internal understanding of culinary possibilities, and mentally prodding this model to reveal the optimal choice. It’s the same effect she would hope to achieve by consulting a recipe book.

#Manipulation software serves the human urge to create. A person uses manipulation software to construct and manipulate a model external to herself—a virtual object represented within the computer, or a remote physical object. Some examples include software for drawing, writing, music composition, architectural design, engineering design, and robot control. Manipulation software can be considered a virtual tool—like a paintbrush or typewriter or bandsaw, it is used as an interface between creator and artifact.

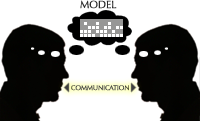

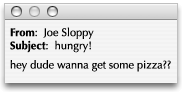

#Communication software serves the human urge to communicate. A person uses communication software to construct and manipulate an internal model that is shared with others—an understanding synchronized across multiple minds. Examples include software for email, group discussions (whether voice, video, or text), and collaborative working. In terms of raw mechanics, communication can be thought of as creating a response to information learned—that is, the external model manipulated by the speaker is the internal model learned by the listener. Thus, this paper will simply treat communication software as manipulation software and information software glued together, and mention it no further. This design approach is widespread—email software typically has separate reading and writing modes; messageboards similarly segregate browsing and posting.** This dismissal is rather disingenuous—communication software is fundamentally unlike the other two because its user is a group, and a group as a whole can have different goals than any of its constituents individually. The considerations of social software design are well beyond the scope of this paper, but see Clay Shirky’s essays, particularly Social Software and the Politics of Groups (2003).

Manipulation software design is hard

#Manipulation software generally displays a representation of an object—the model—which the user directly manipulates with pseudo-mechanical affordances. Because manipulation is the domain of industrial design, manipulation software emphasizes industrial design aspects.

#Consider a tool for laying out a small newspaper. The user will spend most of her time performing a number of pseudo-physical operations—writing, drawing, cutting, moving, rotating, stretching, cropping, layering—within a virtual space. The primary design challenge, just as with any industrial design, is to provide affordances that make these mechanical operations available, understandable, and comfortable. However, in a physical space, each operation would use a specialized tool. Designing a “mega-tool” that cleanly incorporates all operations (and flattens them into two dimensions, and uses only the gestures “click” and “drag”) is a significant challenge indeed.

#Although manipulation is the focus, good manipulation software must provide superb visualization as well. This establishes the feedback loop that is critical for all creative activity—the manipulator must see the effects of her manipulation. Thus, manipulation software design is also a significant graphic design challenge.

#For example, the newspaper editor needs to see what a page looks like—close-up, from a distance, and in relation to other pages—and how it would look in a variety of other configurations. She wants to see misspelled words, lines that are poorly justified or hyphenated, and widows and orphans. She wants to see columns that are short or overlong, and how they can be corrected by changing column width or leading. She wants to know what stories and ads are still on the table, their sizes, and how they can be fit in. She wants to know how recently and how often stories about a given topic have run, and how readers have responded. She wants to know past response to a given ad, as a function of the topics or authors of the stories it was coupled with. Finally, the presentation of all this information must not distract the editor from the primary task of manipulating the layout.

#Furthermore, the industrial and graphic designs in manipulation software must be in intimate synergy, since it is the graphic design which describes how the object can be manipulated—the mechanical affordances are graphical constructs. Even more graphically challenging is manipulation of abstract objects, such as music or financial data, where the graphical representation must show not only what can be done with it, but what it is in the first place.** As opposed to painting software, for instance, where the graphical representation can be the artifact itself. This is not a pipe, but it’s close enough.

#Because of these intertwined design challenges, the design of excellent manipulation software is unbelievably difficult, and mustn’t be underestimated. Fortunately, for an enormous class of software, manipulation is not only largely unnecessary, but best avoided.

Most software is information software

#J.C.R. Licklider once examined how he spent his research time:

#In the spring and summer of 1957… I tried to keep track of what one moderately technical person [myself] actually did during the hours he regarded as devoted to work… About 85 per cent of my “thinking” time was spent getting into a position to think, to make a decision, to learn something I needed to know. Much more time went into finding or obtaining information than into digesting it. Hours went into the plotting of graphs, and other hours into instructing an assistant how to plot. When the graphs were finished, the relations were obvious at once, but the plotting had to be done in order to make them so… Throughout the period I examined, in short, my “thinking” time was devoted mainly to activities that were essentially clerical or mechanical: searching, calculating, plotting, transforming, determining the logical or dynamic consequences of a set of assumptions or hypotheses, preparing the way for a decision or an insight.** J.C.R. Licklider, “Man-Computer Symbiosis” (1960).

#For Licklider and other early visionaries such as Vannevar Bush and Doug Engelbart, the ideal of the then-hypothetical personal computer was a brain supplement, enhancing human memory and amplifying human reasoning through data visualization and automated analysis. Their primary concern was how a machine could help a person find and understand relevant knowledge. Although they were generally discussing scientific and professional work, their prescience fully applies in the modern home.** See Bush’s paper “As We May Think” (1945) and Engelbart’s paper “Augmenting Human Intellect” (1962).

#Most of the time, a person sits down at her personal computer not to create, but to read, observe, study, explore, make cognitive connections, and ultimately come to an understanding. This person is not seeking to make her mark upon the world, but to rearrange her own neurons. The computer becomes a medium for asking questions, making comparisons, and drawing conclusions—that is, for learning.

#People turn to software to learn the meaning of words, learn which countries were bombed today, and learn to cook a paella. They decide which music to play, which photos to print, and what to do tonight, tomorrow, and Tuesday at 2:00. They keep track of a dozen simultaneous conversations in private correspondence, and maybe hundreds in public arenas. They browse for a book for Mom, a coat for Dad, and a car for Junior. They look for an apartment to live in, and a bed for that apartment, and perhaps a companion for the bed. They ask when the movie is playing, and how to drive to the theater, and where to eat before the movie, and where to get cash before they eat. They ask for numbers, from simple sums to financial projections. They ask about money, from stock quote histories to bank account balances. They ask why their car isn’t working and how to fix it, why their child is sick and how to fix her. They no longer sit on the porch speculating about the weather—they ask software.

#Much current software fulfilling these needs presents mechanical metaphors and objects to manipulate, but this is deceiving. People using this software do not care about these artificial objects; they care about seeing information and understanding choices—manipulating a model in their heads.

#For example, consider calendar or datebook software. Many current designs center around manipulating a database of “appointments,” but is this really what a calendar is for? To me, it is about combining, correlating, and visualizing a vast collection of information. I want to understand what I have planned for tonight, what my friends have planned, what’s going on downtown, what’s showing when at the movie theater, how late the pizza place is open, and which days they are closed. I want to see my pattern of working late before milestones, and how that extrapolates to future milestones. I want to see how all of this information interrelates, make connections, and ultimately make a decision about what to do when. Entering a dentist appointment is just a tedious minor detail, and would even be unnecessary if the software could figure it out from my dentist’s confirmation email. My goal in using calendar software is to ask and answer questions about what to do when, compare my options, and come to a decision.

#Consider personal finance software. Entering and classifying my expenses is, again, tedious and unnecessary manipulation—my credit card already tracks these details. I use the software to understand my financial situation and my spending habits. How much of my paycheck goes to rent? How much to Burrito Shack? If I give up extra guacamole on my daily burrito, will I be able to buy a new laptop? What is my pattern of Christmas spending, and will I have to cut back if I don’t take any jobs for a month? If I buy a hybrid car, how much will I save on gas? I want to ask and answer questions, compare my options, and let it guide my spending decisions.

#Consider an online retailer, such as Amazon or Netflix. The entire purpose of the website—the pictures, ratings, reviews, and suggestions—is to let me find, understand, and compare their offerings. The experience is about building a decision inside my head. In the end, I manipulate a shopping cart, but that is merely to put my mental process to effect, to reify the decision. At the best retailers, this manipulation is made as brief as possible.

#Even consider reading email. Most current designs revolve around the manipulation of individual messages—reading them one-by-one, searching them, sorting them, filing them, deleting them. But the purpose of reading email has nothing to do with the messages themselves. I read email to keep a complex set of mental understandings up-to-date—the statuses of personal conversations, of projects at work, of invitations and appointments and business transactions and packages in the mail. That this information happens to be parceled out in timestamped chunks of text is an implementation detail of the communication process. It is not necessarily a good way to present the information to a learner.

#Similar arguments can be made for most software. Ignore the structure of current designs, and ask only, “Why is a person using this?” Abstracted, the answer almost always is, “To learn.”

#So far, this categorization has just been an exercise in philosophy. But this philosophy suggests a very practical approach to software design.

Information software design is graphic design

#It might seem like I’m demanding a lot from my software. But it’s not deep magic—no simulations of complex phenomena, no effects on the external world, certainly no sentience or spark of life. I’m asking for software to display a complex set of data in a way that I can understand it and reason about it. This is a well-established problem; it’s the raison d’etre of information graphic design. My demands are perfect examples of graphic design challenges.

#A well-designed information graphic can almost compel the viewer to ask and answer questions, make comparisons, and draw conclusions. It does so by exploiting the capabilities of the human eye: instantaneous and effortless movement, high bandwidth and capacity for parallel processing, intrinsic pattern recognition and correlation, a macro/micro duality that can skim a whole page or focus on the tiniest detail. Meanwhile, a graphic sidesteps human shortcomings: the one-dimensional, uncontrollable auditory system, the relatively sluggish motor system, the mind’s limited capacity to comprehend hidden mechanisms. A graphic presents no mechanisms to comprehend or manipulate—it plugs directly into the mind’s spatial reasoning centers.

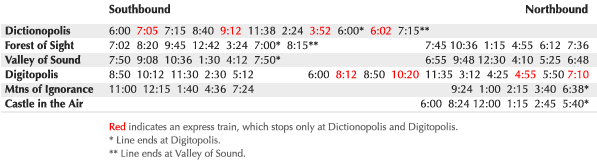

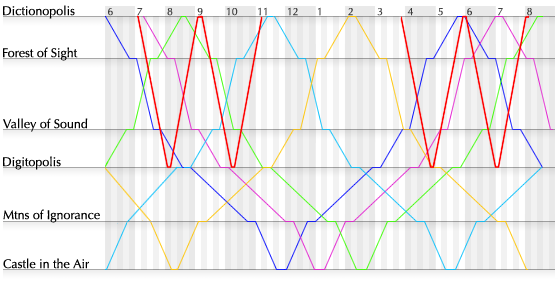

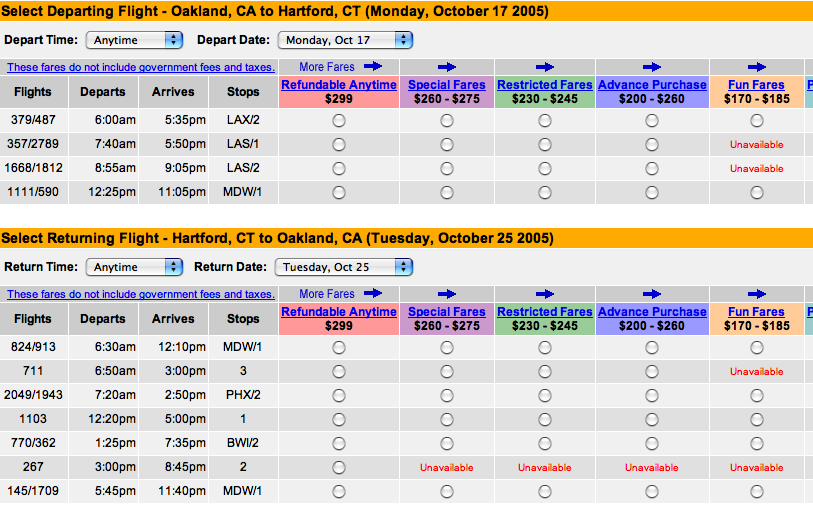

#For example, consider this train timetable:

#This design may be adequate for commuters, whose questions mostly concern when trains arrive at stations. But train system operators have a different set of questions: Where exactly are the trains at any given time? How fast are they moving? Where do two trains cross? (They better not be on the same track at that point!) Where are the trains at the start of the day, and where do they end up at night? If a train is delayed, how do all these answers change? Like some of the software questions above, these questions seem very difficult to answer. But consider this revised timetable design:

#Each train is represented by a distinctly-colored line, with distance along the track plotted vertically and time horizontally. The slope of the line represents the train’s direction and speed; horizontal sections are stops. This graphic incorporates no more data than the previous one, yet all of the operators’ questions are answered at a glance. Important features such as crossings are emphasized simply because the eye is naturally drawn toward line intersections. Footnotes are unnecessary; the exceptions are no longer exceptional when seen in context. Should a train be delayed, all revised stops and crossings can be “calculated” simply by drawing a new line.** Graphical train timetables date from the late 1800s. For the origin of this and other classic graphical forms, see Howard Wainer’s book Graphic Discovery (2005).
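To make the idea of "calculating by drawing a new line" concrete, here is a minimal Python sketch. It assumes each train is stored as a list of (minute, km) points sorted by time, so that a crossing is simply an intersection of two line segments in the time-distance plane. The function names and the sample schedule are hypothetical illustrations, not taken from any real timetable.

```python
# Hypothetical sketch: a train is a list of (minute, km) points sorted by time.
# Straight runs are sloped segments; a stop is a horizontal segment.

def segment_crossing(a0, a1, b0, b1):
    """Return the (minute, km) point where two segments meet, or None.

    Parallel segments are reported as None in this simplified sketch,
    so two trains running side by side are not detected.
    """
    rx, ry = a1[0] - a0[0], a1[1] - a0[1]
    sx, sy = b1[0] - b0[0], b1[1] - b0[1]
    denom = rx * sy - ry * sx
    if denom == 0:
        return None
    qx, qy = b0[0] - a0[0], b0[1] - a0[1]
    u = (qx * sy - qy * sx) / denom  # fraction of the way along segment a
    v = (qx * ry - qy * rx) / denom  # fraction of the way along segment b
    if 0 <= u <= 1 and 0 <= v <= 1:
        return (a0[0] + u * rx, a0[1] + u * ry)
    return None


def crossings(train_a, train_b):
    """List every point where the two trains' lines intersect.

    A crossing that falls exactly on a shared vertex may be listed twice.
    """
    found = []
    for a0, a1 in zip(train_a, train_a[1:]):
        for b0, b1 in zip(train_b, train_b[1:]):
            point = segment_crossing(a0, a1, b0, b1)
            if point is not None:
                found.append(point)
    return found


def delayed(train, minutes):
    """'Draw a new line': shift the whole schedule later by some minutes."""
    return [(t + minutes, km) for t, km in train]


# Illustrative data only: a northbound train with a 10-minute stop at km 20,
# and a southbound train leaving km 50 at minute 10.
northbound = [(0, 0), (20, 20), (30, 20), (60, 50)]
southbound = [(10, 50), (60, 0)]

print(crossings(northbound, southbound))
print(crossings(delayed(northbound, 4), southbound))
```

The second call mirrors the delay scenario: nothing is recomputed by hand; the shifted line is intersected with the others again, which is what the eye does on the printed graphic.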

#Compared to excellent ink-and-paper designs, most current software communicates deplorably. This is a problem of surface, but not a superficial problem. The main cause, I believe, is that many software designers feel they are designing a machine. Their foremost concern is behavior—what the software does. They start by asking: What functions must the software perform? What commands must it accept? What parameters can be adjusted? (In the case of websites: What pages must there be? How are they linked together? What are the dynamic features?) These designers start by specifying functionality, but the essence of information software is the presentation.

#I suggest that the design of information software should be approached initially and primarily as a graphic design project. The foremost concern should be appearance—what and how information is presented. The designer should ask: What is relevant information? What questions will the viewer ask? What situations will she want to compare? What decision is she trying to make? How can the data be presented most effectively? How can the visual vocabulary and techniques of graphic design be employed to direct the user’s eyes to the solution? The designer must start by considering what the software looks like, because the user is using it to learn, and she learns by looking at it.** It must be mentioned that there is a radically alternative approach for information software—games. Playing is essentially learning through structured manipulation—exploration and practice instead of pedagogic presentation. Despite the enormous potential for mainstream software, accidents of history and fashion have relegated games to the entertainment bin, and the stigma of immaturity is tough to overcome. (The situation is similar for graphic novels.) Raph Koster’s Theory of Fun for Game Design (2004) and James Paul Gee’s What Video Games Have To Teach Us About Learning and Literacy (2003) deal directly with games as learning tools. Salen and Zimmerman’s Rules of Play (2003) and Chris Crawford’s Art of Interactive Design (2003) and Chris Crawford on Game Design (2003) discuss learning through play in a broader context.

#Instead of dismissing ink-and-paper design as a relic of a previous century, the software designer should consider it a baseline. If information software can’t present its data at least as well as a piece of paper, how have we progressed?

Demonstration: Showing the data

#Edward Tufte’s first rule of statistical graphic design is, “Show the data.” All information graphics, statistical or not, must present the viewer with enough information to answer her questions. It seems that many software designers, in their focus on functionality, forget to actually present the data.

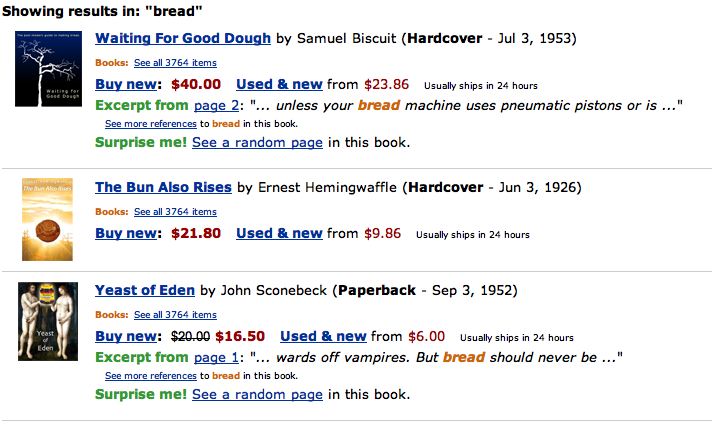

#Consider the information presented when searching a popular online bookstore.** Based on amazon.com as of January 2006.

#There are a number of graphic design criticisms one could make—the uniform text size and weight results in a solid, oppressive mass; the abundance of saturated primary colors gives a distracting, carnival-like appearance; the text is spread all over the page, giving the eye no well-defined path to follow. However, the most egregious problem is simply that there is not enough information to make any sort of decision.

#The user’s goal is to find the best book about some particular topic. Given that the books shown are presumably related to this topic, what questions does the user have?

- Is the book appropriate? That is, what is it about, and do I care?

- Is the book good? That is, what did other people think of it, and do I trust them?

#The answers will be used to compare the available books, and decide upon one to follow up on and possibly buy.

#Unfortunately, these questions are completely unaddressed by the information provided. To see relevant information, the user must click on each listing individually. That is, she must navigate by hand instead of by eye, and must use her memory to compare information across time instead of space.

#The problem is that this graphic was designed as an index into a set of webpages, but is used as a catalog for comparing a set of books. The purpose of this graphic should not be to return a list of query matches, but to help the user learn about books related to her topic of interest.

#Consider this redesign:

#Is a book appropriate? A synopsis and table of contents give an overview of the book’s contents. Is a book good? A rating and reviews indicate popular opinion. Because all of this information is on a single page, it can be compared by eye, with no need for memory.

#The standard 5-star rating system is information-weak—it gives only an average. It can be enhanced with whiskers underneath that indicate the distribution of ratings. This allows the viewer to differentiate between a book that was unanimously judged middling and one that was loved and hated—these are both 3-star ratings, but have very different meanings. The viewer can also see whether a highly-rated book got any bad reviews; in a sea of praise, criticism often makes enlightening reading. As a whole, the whiskers give a visual indication of the number of ratings, which reflects the trustworthiness of the average. The whiskers are unobtrusive, and can easily be ignored by viewers who don’t care about distribution.
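As a rough sketch of the data behind such whiskers, the following Python computes both the average and the per-star distribution from a list of ratings. The function names, the sample ratings, and the text glyphs are assumptions made for illustration; the redesign itself draws the whiskers graphically.

```python
from collections import Counter

# Hypothetical glyph ramp: index 0 is blank, index 8 is a full-height bar.
GLYPHS = " ▁▂▃▄▅▆▇█"


def rating_summary(ratings):
    """Return (average, counts), where counts[i] is the number of (i+1)-star ratings."""
    tally = Counter(ratings)
    counts = [tally.get(stars, 0) for stars in range(1, 6)]
    average = sum(ratings) / len(ratings) if ratings else None
    return average, counts


def whiskers(counts):
    """Render one text bar per star level, scaled to the tallest bar."""
    tallest = max(counts)
    if tallest == 0:
        return " " * len(counts)
    top = len(GLYPHS) - 1
    return "".join(GLYPHS[round(c / tallest * top)] for c in counts)


# Two made-up books with the same average but very different distributions.
middling = [3] * 10
polarizing = [1] * 5 + [5] * 5

for name, ratings in (("middling", middling), ("polarizing", polarizing)):
    average, counts = rating_summary(ratings)
    print(f"{name}: average {average:.1f}, whiskers [{whiskers(counts)}]")
```

Scaling each book to its own tallest bar is a simplification: it shows the shape of the distribution but discards the total number of ratings, which the whiskers described above are also meant to convey.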

#Text weight and color are used to emphasize important information and call it out when skimming. Text in grey can be read when focused upon, but disappears as background texture when skimming. All critical information is contained in a column with the width of an eyespan, with a picture to the left and supplementary information to the right. The viewer can thus run her eye vertically down this column; when she spots something interesting, she will slow down and explore horizontally.

#The user wants to see books related to a topic in her head. But ideas in the head are nebulous things, and may not translate perfectly to a concrete search term. For this reason, a mini-list of related books is provided for each book. This is similar to a “related words” section in a thesaurus listing—it allows the user to correct a near miss, or veer off in a tangential but intriguing direction.

#Conventional software designers will worry about functionality—how does the user interact with this graphic? Clearly, other than the “related books” listing, a click anywhere in a book’s section should reveal details and purchasing options. What else could the user mean by clicking? It’s analogous to pulling the book off a physical shelf.

#This is a significant redesign over the original; yet, I consider it a conservative one. A more ambitious design could surely show even more data, perhaps allowing the user to browse within the book or fully explore the space of related books. A world of possibilities opens up with a simple change of mindset. This is not a list of search results—it is an information graphic. It is for learning.

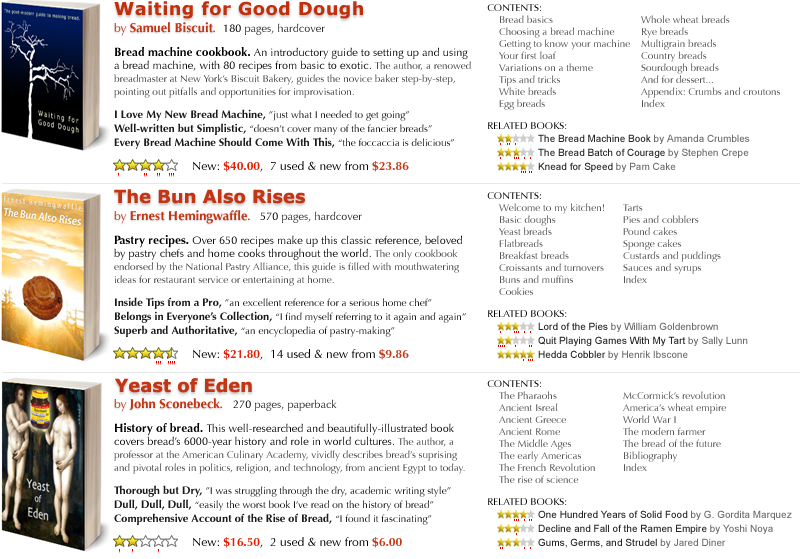

Demonstration: Arranging the data

#Just as important as what data is shown is where it is shown. Unlike the words in a paragraph, the elements in a graphic can be deliberately placed to encourage spatial reasoning. Unfortunately, most software graphics are arranged to maximize aesthetics, not to bring out useful relationships in the data. (That is, when any skilled thought is given to appearance at all.)

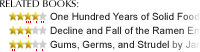

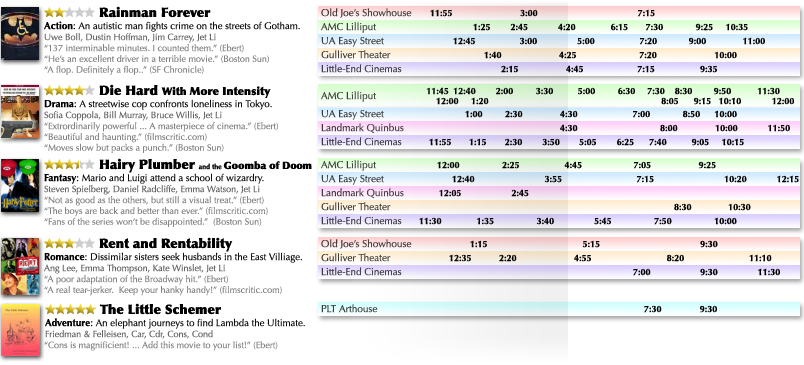

#Consider this excerpt of a graphic for browsing nearby movie showings:** Based on movies.yahoo.com as of January 2006.

#If a person is in the mood for a movie, what questions might she have?

- What movies are showing today, at which times?

- What movies are showing around a particular time?

- Where are they showing?

- What are they about?

- Are they good?

#The user will use the answers to compare the available movie showings and decide upon one to go see.

#Although the above graphic clearly has an information deficiency (What are these movies about? Are they good?), the worst problem is that the data is not arranged in any useful manner. Understanding which movies are playing when involves scanning a pageful of theaters, extracting movies of interest and mentally merging their showtimes. A viewer’s eye might leap erratically around the screen as she compares showtimes of a given movie at six theaters, trying to find the one that best fits her dinner plans.

#The primary question is, “What movies are showing today, at which times?” Given the two spatial dimensions available to us, this should suggest a graphic with movies along one axis and times along the other.

#Consider this redesign:

#As with the bookstore redesign, enough information is given about each movie to determine its content and quality, although films have enough external marketing that the intent is more to remind than introduce. Text weight is again employed to make critical information stand out and supplementary information disappear until focused upon.

#More interesting is the chart on the right, which plots movie showings by time. To find all movie showings around a particular time, the viewer simply scans her eye vertically down the page. If she is only interested in a particular movie, she looks only within that movie’s range. The current time is indicated by shading the past, providing a springboard for the viewer’s eye; in this example, it is about 4:45.

#The original design grouped movies by theater; this redesign groups theaters by movie.** I assume that Yahoo! simply mimicked the newspapers, and newspapers arrange by theater for business reasons. The assumption is that the viewer would rather see a particular movie at any theater than any movie at a particular theater. However, to ease correlation of the various movies offered at a given theater, each theater is color-coded. If the viewer prefers to avoid the Gulliver Theater because of sticky floors, the consistent yellow background may help her filter out its showtimes.
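The regrouping described above amounts to a small data transformation, sketched here in Python. The input shape, the function name, and the movie and theater names other than the Gulliver are assumptions for illustration; times are taken to be 24-hour "HH:MM" strings so that they sort correctly as text.

```python
from collections import defaultdict


def group_by_movie(listings):
    """Turn {theater: {movie: [times]}} into {movie: [(time, theater), ...]}.

    Showings of each movie are sorted by time, and each one keeps its
    theater so that a display can still color-code by theater.
    """
    by_movie = defaultdict(list)
    for theater, movies in listings.items():
        for movie, times in movies.items():
            for time in times:
                by_movie[movie].append((time, theater))
    return {movie: sorted(showings) for movie, showings in sorted(by_movie.items())}


# Hypothetical listings, arranged by theater as in the original design.
listings = {
    "Gulliver": {"Movie A": ["19:00", "16:30"], "Movie B": ["17:15"]},
    "Rialto": {"Movie A": ["17:45"]},
}

for movie, showings in group_by_movie(listings).items():
    print(movie, showings)
```

Each row of the result corresponds to one movie's band in the chart; the theater kept alongside each time is what the color-coding would draw on.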

#No theater addresses are shown. The viewer is likely to be familiar with the theaters in her area, and if she isn’t, a simple address would be useless without a map or directions. Presumably, a mouse click or hover over a theater’s name would reveal this information, or perhaps it could be displayed elsewhere on the page.

#This demonstration and the previous one have attempted to illustrate the power of approaching information software as graphic design, instead of as styling the regurgitation of a database. To design excellent software, however, this mindset is necessary but insufficient. Something major is missing.

#Very little in the above designs is software-specific. For the most part, the designs would work almost as well on paper. Modern magic shouldn’t merely match our ancient technology—it should surpass it. We’ve seen how graphic design can improve software, but how can software improve graphic design?

#The answer lies with context.

Context-sensitive information graphics

#Print has one supreme flaw: ink is indelible. An ink-and-paper design is static—it must display all its data, all the time. However, a reader typically only cares about a subset relevant to her current situation. The designer is faced with the challenge of organizing the data so that hopefully mutually-relevant subsets are grouped together, and the reader has the challenge of visually or physically navigating through the entire data space to find the group of interest.

#For example, a rider consulting a bus schedule must comb through a matrix of times and stations to find the single relevant data point—the time of the next bus.** And then, she must consult her watch and do some arithmetic to calculate the information she actually cares about—how long she will be waiting. Any driver who’s been lost in an unfamiliar city knows the frustration of locating the immediate vicinity on a roadmap. And a reader consulting an encyclopedia must not only find the right entry on the page and the right page in the book, but even the right book on the shelf! These are consequences of static graphics. Because ink is permanent, the reader must navigate through lots of paper.

#The modern computer system provides the first visual medium in history to overcome this restriction. Software can:

- infer the context in which its data is needed,

- winnow the data to exclude the irrelevant, and

- generate a graphic which directly addresses the present needs.
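To make the three steps concrete, here is a minimal Python sketch applied to the bus schedule example. The function names, the departure times, and the wording of the output are assumptions for illustration; a real design would generate a graphic rather than a sentence.

```python
from datetime import datetime


def next_bus(departures, now):
    """Winnow today's "HH:MM" departures to the next one at or after `now`.

    Returns (departure time as "HH:MM", whole minutes to wait), or None
    if no departures remain today.
    """
    upcoming = []
    for hhmm in departures:
        departure = datetime.combine(now.date(), datetime.strptime(hhmm, "%H:%M").time())
        if departure >= now:
            upcoming.append(departure)
    if not upcoming:
        return None
    soonest = min(upcoming)
    wait_minutes = int((soonest - now).total_seconds() // 60)
    return soonest.strftime("%H:%M"), wait_minutes


def describe(departures, now):
    """Generate a statement that directly answers "how long will I wait?"."""
    result = next_bus(departures, now)
    if result is None:
        return "No more buses today."
    time, wait = result
    return f"Next bus at {time}, in {wait} min."


# Context is inferred from the clock; this fixed value stands in for datetime.now().
now = datetime(2006, 1, 15, 16, 45)
print(describe(["16:10", "16:40", "17:10", "17:40"], now))
```

The rider never sees the full matrix of times: the clock supplies the context, the schedule is winnowed to one departure, and the output states the wait directly.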

#Liberating us from the permanence of publication is the undersung crux of the computer—the dynamic display screen. Its pixels are magic ink—capable of absorbing their context and reflecting a unique story for every reader. And the components surrounding the display—CPU, storage, network, input devices—are its peripherals for inferring context.

#Information software design, then, is the design of context-sensitive information graphics. Unlike conventional graphics, which must be suitable for any reader in any situation, a context-sensitive graphic incorporates who the user is and what exactly the user wants to learn at the moment. Context allows software to winnow its data space to the subset of information that the user cares about, and present the data in such a way that the user’s current questions can best be answered.

#All information software consists of context-sensitive graphics, whether the designer realizes it or not. For example, the list of query results from an internet search engine is a context-sensitive information graphic. The software’s data space consists of all the websites in the world. This is winnowed down to a dozen, using context that is inferred entirely from the search term contributed by the user.** Clicking a “next” button contributes further context—dissatisfaction with the first set of results. Despite its enormous data space, this software restricts itself to a meager scrap of context, impersonal and imprecise.

#There are, in fact, three sources from which software can infer context:

- Environment involves sensing the current state of the world.

- History involves remembering the past.

- Interaction involves soliciting input from the user.

Inferring context from the environment

#A person determines her surroundings through the five human senses. Software doesn’t operate in a vacuum, either; through connections to hardware and other software, it can sense much about the user’s situation. Some examples of context clues in the software’s environment include:

- Date and time. Time is one of the fundamental dimensions along which we organize our lives, and in any data space with a temporal dimension, “now” is almost always the prime landmark. Because users often seek information on demand, information related to “now” or “soon” is often the most relevant. Fortunately, every general-purpose computer knows when “now” is. A person using a software bus schedule, for example, should never have to hunt for the next bus.

- Geographical location. Similarly, the most interesting spatial landmark is usually “here.” Unfortunately, this currently can be harder to determine automatically, but the payoff is enormous.** I believe that location is such vital context, Powerbooks should come with GPS receivers pre-installed, with an easy software API. Developers would then write software to take advantage of it, and other computer makers would follow suit. Someday, a computer without GPS might seem as silly as a computer without a clock. Obviously, a software roadmap needs to know the user’s location, but so does the bus schedule, as well as business listings, transportation planners, travel guides, and much other information software.

- Physical environment. Given a time and location, many details of the physical environment, such as the weather, are just a network connection away. Consider a travel guide that suggests parks when sunny and museums when rainy.

- Other information software, such as open websites. By reading some information, the user is indicating a topic of interest. All other information software should take heed. Consider a person reading the website of an upcoming stage play. When she opens her calendar, the available showings should be marked. When she opens a map, she should see directions to the playhouse. When she opens a restaurant guide, she should see listings nearby, and unless the play offers matinees, they shouldn’t be lunch joints.

- Documents created with manipulation software. Creating some information indicates an even stronger topic of interest. Consider a person who requests information about “cats” while writing a paper. If the paper’s title is “Types and Treatment of Animal Cancer,” the information should skew toward feline medical data. The title “History of Egypt” indicates interest in ancient feline worship instead. And if the paper contains terms related to building construction, “cats” probably refers to the decidedly non-feline Caterpillar heavy machinery.** This example is from Budzik and Hammond’s paper User Interactions with Everyday Applications as Context for Just-in-time Information Access (2000).

- Email. Names, addresses, and phone numbers in recent email clearly constitute valuable hints. A recipient who opens a calendar should find the sender’s schedule juxtaposed with her own. When she opens a map, addresses in the email should be marked. But beyond that, recent correspondence can indicate current activities, and an email archive as a whole can describe the user’s characteristics and interests. Consider a person who requests information about “racing.” The fields of running, bicycles, and cars have distinct sets of terminology; if one set regularly shows up in the person’s conversations, “racing” isn’t so ambiguous.

#All software lives within an environment, rich with evidence of context. Using software that doesn’t look outside itself is like conversing with a blind person—constantly describing what is plainly visible.** Some of the suggestions given here may seem daunting (or infeasible) to an engineer. Implementation will be discussed later in the paper.

#On the other hand, the power of the environment is multiplied when it is correlated with the past—that is, when the software makes use of history.

Inferring context from history

#A human doesn’t just use her senses to recognize her situation; she also uses memories of past situations. Software, too, can use its memory to understand the present. The current context, or a good approximation, can often be predicted from a history of past environments and interactions.

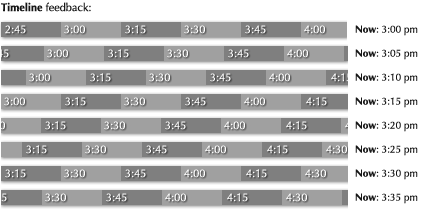

#Last-value predictors represent the simplest form of prediction. They simply predict the current context to be the same as the previous one. This is reasonable in many situations where the user’s context is fairly static, changing slowly over the short term. For example, if yesterday, the user looked for one-bedroom apartments in North Berkeley, she is probably still interested in one-bedroom apartments in North Berkeley today. If nothing else, the software should present this information immediately, without asking for details.

#Last-value prediction is frequently thought of and implemented as manipulation of explicit state—that is, the context is a persistent object that remains as is unless changed by the user, so the software always appears as the user left it.** The engineering challenge then becomes merely persisting across invocations of the program. Often, not even this is bothered with. This stateful conceptual model mimics physical reality, and can be comfortable if the user cares enough about the software’s state to keep her own mental state in sync. However, this is often not the case with information software, especially software that is consulted intermittently. (If you put down a newspaper for a few hours, you won’t be distressed to find it on a different page when you return. You probably won’t even notice. On the other hand, you would be delighted if you often came back to find it on exactly the page you wanted to read.) By thinking of this as context prediction instead of state maintenance, the door is opened to more sophisticated predictors.
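A last-value predictor that survives across invocations can be very small. The sketch below is one possible shape for it, not a prescribed implementation: the class name, the JSON file format, and the context fields are all assumptions for illustration.

```python
import json
from pathlib import Path


class LastValuePredictor:
    """Predict the current context to be whatever was observed last.

    The context is stored as JSON in a file so the prediction persists
    across runs of the program (the file format is an assumption).
    """

    def __init__(self, path, default=None):
        self.path = Path(path)
        self.default = default

    def observe(self, context):
        """Record the context the user actually ended up with."""
        self.path.write_text(json.dumps(context))

    def predict(self):
        """Return the last observed context, or the default if none exists."""
        try:
            return json.loads(self.path.read_text())
        except (FileNotFoundError, json.JSONDecodeError):
            return self.default
```

An apartment finder might call `observe({"area": "North Berkeley", "bedrooms": 1})` whenever the user settles on a view, and call `predict()` at startup to decide what to show first. Framing the stored value as a prediction rather than as state leaves room to swap in a smarter predictor behind the same two methods.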

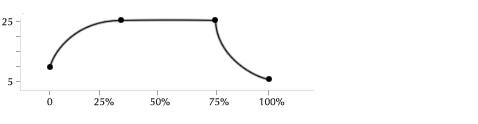

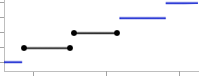

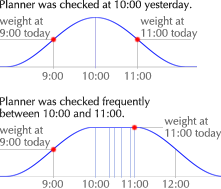

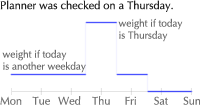

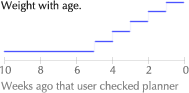

#Learning predictors attempt a deeper understanding of the user. They construct a model to explain past contexts, and use the inferred relationships to predict the current context.

#One simple approach to learning is to discover a common attribute of recent contexts, and narrow the current context along that attribute’s dimension. For example, in a music library, as the user chooses several bluegrass songs in a row, the software can graphically emphasize other songs in this genre. With further confidence, it might consider de-emphasizing or omitting songs outside of the genre. As another example, consider a user who requests information about “Lightwave,” then about “Bryce,” then “Blender.” These terms have many meanings individually, but as a group they are clearly names of 3D rendering software packages. A subsequent search for “Maya,” another 3D package, should not display information about the ancient civilization. In fact, information about Maya could be presented automatically.
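The common-attribute approach can be sketched in a few lines of Python. Everything named here (the functions, the song records, and their fields) is an assumption for illustration, and exact equality of attribute values is a deliberately crude notion of "common."

```python
def shared_attributes(recent_items):
    """Return the {attribute: value} pairs that every recent item has in common."""
    if not recent_items:
        return {}
    common = dict(recent_items[0])
    for item in recent_items[1:]:
        common = {key: value for key, value in common.items() if item.get(key) == value}
    return common


def emphasize(library, recent_items):
    """Return the library items that match everything the recent choices share."""
    common = shared_attributes(recent_items)
    return [item for item in library if all(item.get(k) == v for k, v in common.items())]


# Hypothetical music library; the user has just chosen the first two songs.
library = [
    {"title": "Song A", "genre": "bluegrass"},
    {"title": "Song B", "genre": "bluegrass"},
    {"title": "Song C", "genre": "jazz"},
    {"title": "Song D", "genre": "bluegrass"},
]
recent = library[:2]

print(shared_attributes(recent))
print([song["title"] for song in emphasize(library, recent)])
```

When the recent choices share nothing, `shared_attributes` returns an empty dictionary and `emphasize` returns the whole library, so the software narrows the view only when it has evidence to do so.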

#Another simple approach is to establish the user’s velocity through the data space. If a person asks a travel guide about the Grand Canyon on one day, and Las Vegas the next day, the following day the software might suggest attractions around Los Angeles.** A better travel guide would suggest skipping Los Angeles.

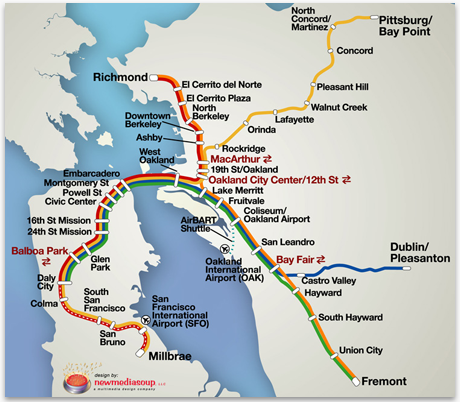

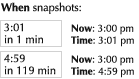

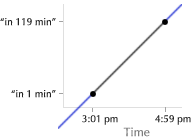

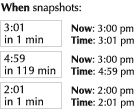

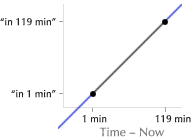

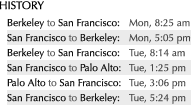

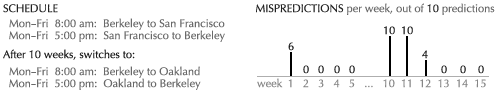

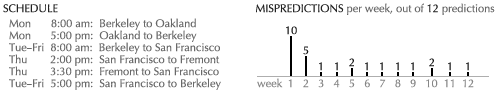

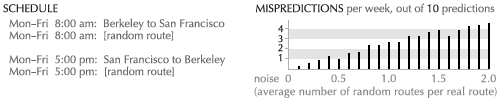

#In general, the problem is one of inferring a pattern that explains the user’s interests as a function of the environment, and extrapolating along the pattern to classify the current environment. As an example of general pattern modeling, consider a person who, as a byproduct of traveling to work, always checks the train schedule from Berkeley to San Francisco in the morning, and San Francisco to Berkeley in the evening. If the software can discover and model this pattern, it can present the appropriate information at each time without the user having to request it. When she looks in the morning, she sees by default the San Francisco-bound schedule; in the evening, the Berkeley-bound schedule.** Again, this may sound daunting to an engineer. Implementation will be discussed later in the paper.
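One very simple way to model the commuter's pattern is to count which schedule she requests in each part of the day and predict the most frequent one. The sketch below assumes a crude two-bucket split at noon and hypothetical class and method names; it is an illustration of the idea, not the implementation discussed later in the paper.

```python
from collections import Counter, defaultdict


class TimeOfDayPredictor:
    """Predict the most frequently requested item for the current part of the day."""

    def __init__(self):
        # Maps a time bucket to a tally of what was requested in that bucket.
        self.history = defaultdict(Counter)

    @staticmethod
    def _bucket(hour):
        # Assumption: a two-way split at noon is enough for this example.
        return "morning" if hour < 12 else "evening"

    def observe(self, hour, request):
        """Record that `request` was made at the given hour (0-23)."""
        self.history[self._bucket(hour)][request] += 1

    def predict(self, hour):
        """Return the most common past request for this part of the day, or None."""
        counts = self.history.get(self._bucket(hour))
        if not counts:
            return None
        return counts.most_common(1)[0][0]


predictor = TimeOfDayPredictor()
for _ in range(5):
    predictor.observe(8, "Berkeley to San Francisco")
    predictor.observe(18, "San Francisco to Berkeley")
predictor.observe(9, "San Francisco to Berkeley")  # an occasional exception

print(predictor.predict(7))
print(predictor.predict(17))
```

A more careful model would learn the buckets from the data and would also condition on other environmental clues, such as the day of the week or the user's location.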

#Large histories can enable some very sophisticated predictors, especially if it is possible to reach into the environment and correlate with other users’ histories. For example, by asking their users to rate each movie they return, Netflix is able to infer some enormously valuable context—each user’s taste. This allows them to winnow an enormous dataset (their catalog of movies) down to a dozen data points (movies the user hasn’t seen, which were enjoyed by people with similar taste), which can be presented in a single, navigation-free graphic. The winnowing is impressively on-target—two-thirds of users’ selections come from recommendations.** Laurie J. Flynn, Like This? You’ll Hate That. New York Times, Jan. 23, 2006. TiVo similarly uses a collaborative predictor to infer which television programs the user would be interested in. These are presented on a “suggestions” page, and recorded automatically when possible.** For technical details, see Ali and van Stam’s paper TiVo: Making Show Recommendations Using a Distributed Collaborative Filtering Architecture (2004).
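The general idea of a collaborative predictor can be sketched as follows. This is a toy user-based scheme written for illustration only; it is not the algorithm used by Netflix or TiVo, and the similarity measure, function names, users, and ratings are all assumptions.

```python
def similarity(a, b):
    """Agreement between two users' {movie: 1-5 rating} dicts, from 0.0 to 1.0.

    Averages how close the two ratings are on each movie both users rated;
    returns 0.0 when they have no movies in common.
    """
    shared = set(a) & set(b)
    if not shared:
        return 0.0
    return sum(1 - abs(a[m] - b[m]) / 4 for m in shared) / len(shared)


def recommend(ratings, user, top_n=3):
    """Rank movies the user hasn't rated by similarity-weighted average rating."""
    mine = ratings[user]
    weighted_sum, weight_total = {}, {}
    for other, theirs in ratings.items():
        weight = similarity(mine, theirs)
        if other == user or weight <= 0:
            continue
        for movie, rating in theirs.items():
            if movie in mine:
                continue
            weighted_sum[movie] = weighted_sum.get(movie, 0.0) + weight * rating
            weight_total[movie] = weight_total.get(movie, 0.0) + weight
    ranked = sorted(weighted_sum, key=lambda m: (-weighted_sum[m] / weight_total[m], m))
    return ranked[:top_n]


# Hypothetical users: bob's taste matches ann's, cat's is the opposite.
ratings = {
    "ann": {"A": 5, "B": 1},
    "bob": {"A": 5, "B": 1, "C": 5, "D": 2},
    "cat": {"A": 1, "B": 5, "C": 1, "D": 5},
}

print(recommend(ratings, "ann"))
```

Here ann is offered the movies bob liked, in bob's order of preference, because cat's completely opposite ratings give her a weight of zero.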

#Amazon, iTunes, and an increasing number of other online retailers are currently incorporating similar schemes. However, with the exception of the lowly junk-mail filter, non-retail information software that learns from history is still rare. Typically, users can only hope for last-value prediction, if that. Most software wakes up each day with a fresh case of amnesia.

#Unfortunately, software that doesn’t learn from history dooms users to repeat it. And repeat it they will—tediously explaining their context, mouse click by mouse click, keystroke by keystroke, wasted hour by wasted hour. This is called interactivity.

Interactivity considered harmful

#Chris Crawford defines interaction as a three-phase reciprocal process, isomorphic to a conversation: an interactant listens to her partner, thinks about what was said, and speaks a response. Her partner then does the same.** See Crawford’s book The Art of Interactive Design (2003), or his essay Fundamentals of Interactivity (1993). For manipulation software, interaction is perfectly suitable: the user views a visual representation of the model, considers what to manipulate next, and performs a manipulation. The software, in turn, inputs the user’s manipulation request, updates the model, and displays the updated representation. With good feedback and an effective means of “speaking” to the software, this process can cycle smoothly and rapidly. It mimics the experience of working with a physical tool.

#Information software, by contrast, mimics the experience of reading, not working. It is used for achieving an understanding—constructing a model within the mind. Thus, the user must listen to the software and think about what it says… but any manipulation happens mentally.** Except possibly for signaling a decision, such as clicking a “buy” button, but that concludes, not constitutes, a session. The only reason to complete the full interaction cycle and speak is to explicitly provide some context that the software can’t otherwise infer—that is, to indicate a relevant subset of information. For information software, all interaction is essentially navigation around a data space.

#For example, Amazon’s data space consists of their catalog of items. For a yellow pages directory, the data space contains all business listings; for a movie guide, all showtimes and movie information; for a flight planner, trips to and from all airports. In all of these cases, every interaction, every click and keystroke, search term and menu selection, simply serves to adjust the user’s view into the data space. This is simply navigation.

#Alan Cooper defines excise in this context as a cognitive or physical penalty for using a tool—effort demanded by the tool that is not directly in pursuit of a goal. For example, filling a gas tank is done to support the car, not the goal of arriving at a destination. Cooper goes on to assert that software navigation is nothing but excise:

#…the most important thing to realize about navigation is that, in almost all cases, it represents pure excise, or something close to it. Except in games where the goal is to navigate successfully through a maze of obstacles, navigation through software does not meet user goals, needs, or desires. Unnecessary or difficult navigation thus becomes a major frustration to users. In fact, it is the authors’ opinion that poorly designed navigation presents the number-one problem in the design of any software application or system…** Alan Cooper and Robert Reimann, About Face (2003), p143.

#If all interaction is navigation, and navigation is the number-one software problem, interactivity is looking pretty bad already. However, when compared with the other two sources of context, interactivity has even worse problems than simply being a frustrating waste of time:

- The user has to already know what she wants in order to ask for it. Software that infers from history and the environment can proactively offer potentially relevant information that the user wouldn’t otherwise know to ask for. Purely interactive software forces the user to make the first move.

- The user has to know how to ask. That is, she must learn to manipulate a machine. Donald Norman’s concept of determining a user’s “mental model” has become widespread in the software usability community, and is now considered a core design challenge.** See Donald Norman’s book The Design of Everyday Things (2002), p9. However, Norman described this concept in the context of mechanical devices. It only applies to software if the software actually contains hidden mechanisms that the user must model. A low-interaction, non-mechanical information graphic relieves both user and designer from struggling with mental models.

- Navigation implies state. Software that can be navigated is software in which the user can get lost. The more navigation, the more corners to get stuck in. The more manipulable state, the more ways to wander into a “bad mode.” State is the primary reason people fear computers—stateful things can be broken.** The only state kept by a book is which page it is open to, which is why “getting lost in a book” describes a pleasurable experience!

#Beyond these cognitive problems are physical disadvantages of interaction. The hand is much slower than the eye. Licklider described spending hours plotting graphs and seconds understanding them. A user who must manually request information is in a similar situation—given the mismatch between mousing and reading speeds, most of her time may be spent navigating, not learning. Further, the user might prefer to learn information while using her hands for other purposes, such as writing or eating or stroking a cat. Each time software demands the user’s hands, this activity must be interrupted. Finally, the growing prevalence of computer-related repetitive stress injuries suggests that indiscriminate interactivity may be considerably harmful in a literal, physical sense.

#Unless it is enjoyable or educational in and of itself, interaction is an essentially negative aspect of information software. There is a net positive benefit if it significantly expands the range of questions the user can ask, or improves the ease of locating answers, but there may be other roads to that benefit. As suggested by the above redesigns of the train timetable, bookstore, and movie listings, many questions can be answered simply through clever, information-rich graphic design. Interaction should be used judiciously and sparingly, only when the environment and history provide insufficient context to construct an acceptable graphic.

#It is unfortunate that the communities concerned with human factors of electronic artifacts have latched onto the term “interaction.”** Most professional communities and academic programs use the term Human-Computer Interaction, or HCI; the ACM special-interest group is CHI, the converse. Many practitioners, following Cooper and Bill Moggridge, refer to their profession as “interaction design.” For information software, the real issue is context-sensitivity. Interaction is merely one means of achieving that. And as long as “speaking” is constrained to awkwardly pushing metaphors with a mouse, interaction should be the last resort.

#The working designer might protest that interaction is unavoidable in practice, and may even consider my ideal of interaction-free software to be a scoff-worthy fantasy. This is only because the alternatives have been unrecognized and underdeveloped. I believe that with the invention of new context-sensitive graphical forms and research into obtaining and using environment and history, the clicking and dragging that characterizes modern information retrieval will be made to seem laughably archaic. But every condonation of “interactivity,” from the annals of academia to the corporate buzzvocabulary, postpones this future.

Reducing interaction

#When the user is forced to interact, the software assumes the form of manipulation software. The external model, manipulated through navigation, is the software’s model of the context. However, unlike genuine manipulation software, the user does not care about this model—it is merely a means to the end of seeing relevant information.

#The designer’s goal is to let the user adequately shape the context model with as little manipulation as possible. Assuming that graphic design, history, and the environment have been taken as far as they will go, there are a few techniques that can lessen the impact of the remaining interaction:

- Graphical manipulation domains present the context model in an appropriate, informative setting.

- Relative navigation lets the user correct the model, not construct it.

- Tight feedback loops let the user stop manipulating when she’s close enough.

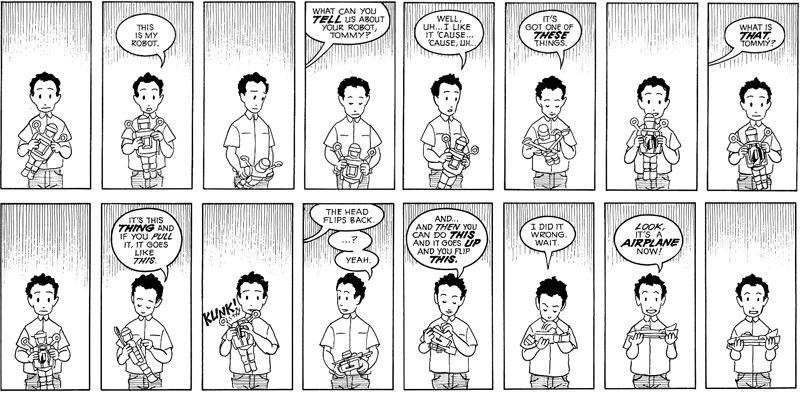

#Graphical manipulation. Command-line systems are criticized for forcing the user to learn the computer’s language. Modern GUIs may be easier to use, but they are not much different in that respect. The GUI language consists of a grammar of menus, buttons, and checkboxes, each labeled with a vocabulary of generally decontextualized short phrases. The user “speaks” by selecting from a tiny, discrete vocabulary within an entirely fixed grammatical structure—a bizarre pidgin unlike any human language, unexpressive and unnatural.** One might wonder what Sapir and Whorf would conclude.

#As an alternative, consider a child describing his toy at “Show and Tell”:** From Scott McCloud’s book Understanding Comics (1994), p138.

#Because the child’s “telling” skills are underdeveloped, he communicates complex concepts through showing. Similarly, a GUI’s stunted grammar makes telling tedious, but software’s dynamic display is ideal for showing. A user can specify context by pointing somewhere on an information graphic and saying, “There!”

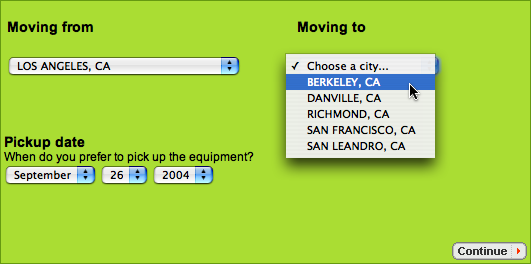

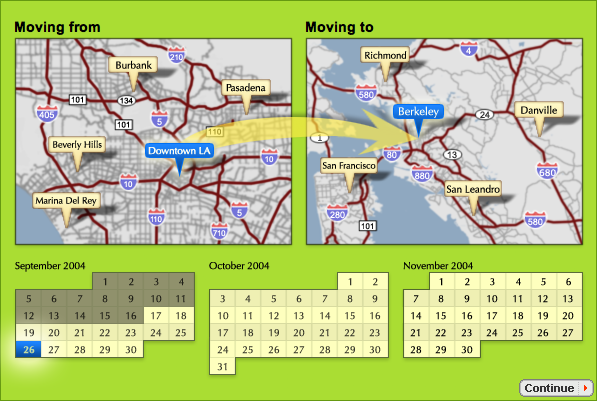

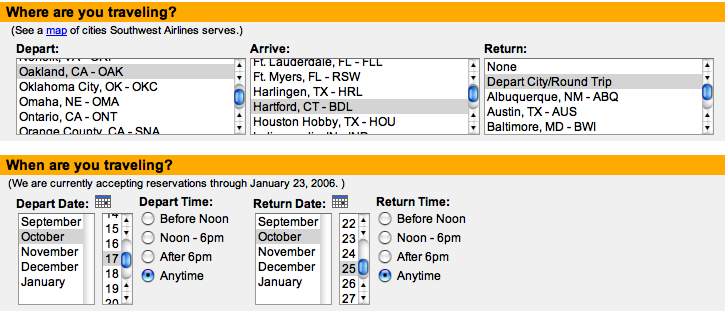

#Two of the most fundamental context dimensions are where and when. For millennia, people have described these concepts with specialized information graphics. But much modern software abandons this tradition, as seen on the website of a popular moving company:** Based on uhaul.com as of January 2006.

#These drop-down menus are awkward and uninformative. Geographical locations belong on maps, and dates belong on calendars. Consider this redesign:

#Even this is not ideal. Locations and dates should be chosen from the user’s own map and calendar. But until platforms that enable such a thing are widespread, software can at least provide temporary ones.

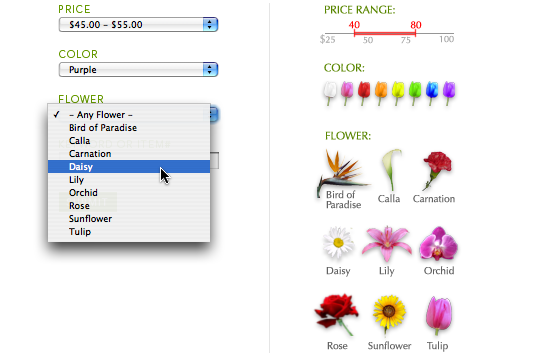

#As an example of more application-specific context, a prominent online flower shop lets the user narrow the view via a set of drop-down menus.** Based on teleflora.com as of January 2006. Compare it with a simple visually-oriented redesign:

#Many types of context can be naturally expressed in some informative graphical domain, relieving the user from manipulating information-free general-purpose controls. Several more examples will be given in the case study below.

#Relative navigation. If the software properly infers as much as possible from history and the environment, it should be able to produce at least a reasonable starting point for the context model. Most of the user’s interaction will then consist of correcting (or confirming) the software’s predictions. This is generally less stressful than constructing the entire context from scratch.

#For example, Google Maps offers both absolute navigation (typing in an address) and relative navigation (panning and zooming the current map). However, by default it initially displays the entire continent; this effectively demands that the user type in an absolute location to get started.** The user can manually specify an initial location, but she will presumably set this to her home. Ironically, her own neighborhood is the least likely place she’ll need mapped. A better design might start at the last place the user looked (last-value prediction), with a nearby list of locations predicted by history (recently visited or manually bookmarked) and the environment (addresses extracted from email, open websites, and calendar software). A reasonable starting point would almost always be a click away, and from there, the user could use relative navigation (dragging and zooming) or simply “navigate” by eye if the graphic is detailed enough.** Conceptually, a prediction list might itself be considered relative navigation, as a set of “shortcuts” through the data space.

#An even better design would recognize the prediction list as information software in its own right, and would take steps to show the data (such as annotating the predictions with driving times to and from common locations, taking current traffic conditions into account) and arrange the data (perhaps spatially arranging the predictions on their own map). This might answer most of the user’s questions without any interaction at all.
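#The prediction list described above can be sketched in a few lines. This is only an illustration under stated assumptions: the names `Prediction` and `build_prediction_list` are hypothetical, places are plain strings, and the ordering rule (history first, then environment) is one plausible choice rather than anything the design prescribes.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Prediction:
    label: str   # place name shown to the user
    source: str  # "last", "history", or "environment" (hypothetical tags)


def build_prediction_list(last_viewed, history, environment, limit=5):
    """Return (starting view, one-click shortcuts).

    last_viewed: place shown most recently (last-value prediction), or None
    history:     places recently visited or bookmarked, most recent first
    environment: places extracted from email, open websites, calendars
    """
    seen = set()
    shortcuts = []
    candidates = [(p, "history") for p in history]
    candidates += [(p, "environment") for p in environment]
    for place, source in candidates:
        if place == last_viewed or place in seen:
            continue  # skip duplicates and the place already on screen
        seen.add(place)
        shortcuts.append(Prediction(place, source))
    start = Prediction(last_viewed, "last") if last_viewed is not None else None
    return start, shortcuts[:limit]
```

#The map opens on the starting view, and each shortcut is a single click away; the user corrects the model instead of constructing it.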

#Tight feedback loops. Salen and Zimmerman offer a game design perspective on a principle that is crucial for all interactive software:

#If you shoot an asteroid while playing a computer game and the asteroid does not change in any way, you are not going to know if you actually hit it or not. If you do not receive feedback that indicates you are on the right track, the action you took will have very little meaning. On the other hand, if you shoot an asteroid and you hear the sound of impact, or the asteroid shudders violently, or it explodes (or all three!) then the game has effectively communicated the outcome of your action.** Katie Salen and Eric Zimmerman, Rules of Play (2003), p35.

#This principle is universal. If the user clicks a checkbox and nothing happens, her action is rendered ambiguous or even meaningless. She cannot evaluate a response and let it guide her next action. In terms of Crawford’s conversation metaphor, the software is failing to speak back—she is shouting into the wind.

#For information software in particular, all interaction specifies context. Thus, each interaction can and should result in a discernible change to a context-sensitive information graphic. Providing immediate feedback reduces the amount of manipulation the user must do before either reaching an adequate view or recognizing a wrong turn and backtracking.

#Any web form with a “submit” button or dialog box with an “accept” button fails this point. Google Maps offers reasonable feedback during relative navigation, but none during absolute navigation, such as typing in an address. Even a simple predictive auto-complete would be helpful, but consider the possibilities suggested by Ben Fry’s zipdecode applet. (First click “zoom” in the lower-right, then type in numbers.) Imagine homing in on familiar areas simply by typing the first few digits of a zip code: type “9” to immediately zoom into the US west coast, followed by “4” to zoom into the SF bay area and then “5” for the east bay. Because of the immediate feedback, the user can stop typing when she gets close enough, and use relative navigation from there.
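#The zip-code idea amounts to recomputing the map’s bounding box on every keystroke. The sketch below is not how zipdecode is implemented; it is a minimal illustration in which `bounds_for_prefix` is a hypothetical helper and `SAMPLE` holds a handful of rough placeholder coordinates rather than a real zip-code database.

```python
# Rough placeholder coordinates (latitude, longitude), for illustration only.
SAMPLE = {
    "94501": (37.77, -122.27),
    "94110": (37.75, -122.42),
    "90210": (34.09, -118.41),
    "10001": (40.75, -73.99),
}


def bounds_for_prefix(zip_locations, prefix):
    """Return (min_lat, min_lon, max_lat, max_lon) covering every zip code
    that starts with `prefix`, or None if no code matches."""
    matches = [loc for code, loc in zip_locations.items() if code.startswith(prefix)]
    if not matches:
        return None
    lats = [lat for lat, _ in matches]
    lons = [lon for _, lon in matches]
    return (min(lats), min(lons), max(lats), max(lons))


def zoom_steps(zip_locations, typed):
    """Bounding box after each keystroke, so the view can update immediately."""
    return [bounds_for_prefix(zip_locations, typed[:i]) for i in range(1, len(typed) + 1)]
```

#Each digit shrinks the box, and the view can be redrawn after every one of them, so the user can stop typing as soon as the map looks close enough.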

How did we get here?

#Much current software is interaction-heavy and information-weak. I can think of a few reasons for this.

#First, our current UI paradigm was invented in a different technological era. The initial Macintosh, for example, had no network, no mass storage, and little inter-program communication. Thus, it knew little of its environment beyond the date and time, and memory was too precious to record significant history. Interaction was all it had, so that’s what its designers used. And because the computer didn’t have much to inform anyone of, most of the software at the time was manipulation software: magic versions of the typewriter, easel, and ledger-book. Twenty years and an internet explosion later, software has much more to say, but an inadequate language with which to say it.** Make no mistake, I revere GUI pioneers such as Alan Kay and Bill Atkinson, but they were inventing rules for a different game. Today, their windows and menus are like buggy whips on a car. (Although Alan Kay clearly foresaw today’s technological environment, even in the mid-’70s. See “A Simple Vision of the Future” in his fascinating Early History of Smalltalk (1993).)

#A second reason why modern software is dominated by mechanical metaphors is that, for the people who create software, the computer is a machine. The programmer lives in manipulation mode; she drives her computer as if it were a car. Thus, she inadvertently produces software that must be operated like a machine, even if it is used as a newspaper or book. Worse, the people who design platforms and GUI toolkits are even more prone to this perspective, since they work at a lower level. The application software designer is then almost forced into a mechanical model by the design environment.** Apple’s Interface Builder, for example, makes it simple to place buttons, sliders, and blocks of text. Dynamic graphics, the cornerstone of information software, must be tediously programmed with low-level constructs.

#Even software that starts out information-rich and interaction-simple tends to accumulate wasteful manipulation as features are added over successive versions. It’s easier on both the designer and the programmer to plug in another menu item and dialog box than to redesign a dynamic graphic, and sometimes it’s justified as a less jarring change for the user. After ten versions, the software can grow into a monstrosity, with the user spending more time pulling down menus than studying and learning information.

#Software doesn’t have to be this way, but the solution will require a significant re-thinking of both the design process and the engineering platforms. After a detailed case study of one recent design, I will discuss what’s needed to usher in the information software revolution.

Case study: Train schedules

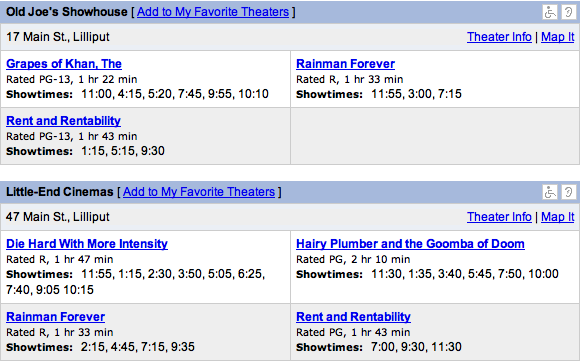

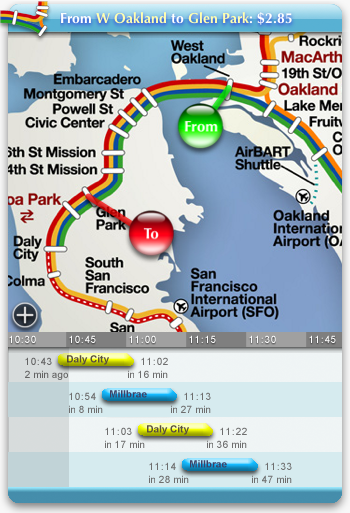

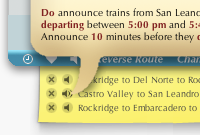

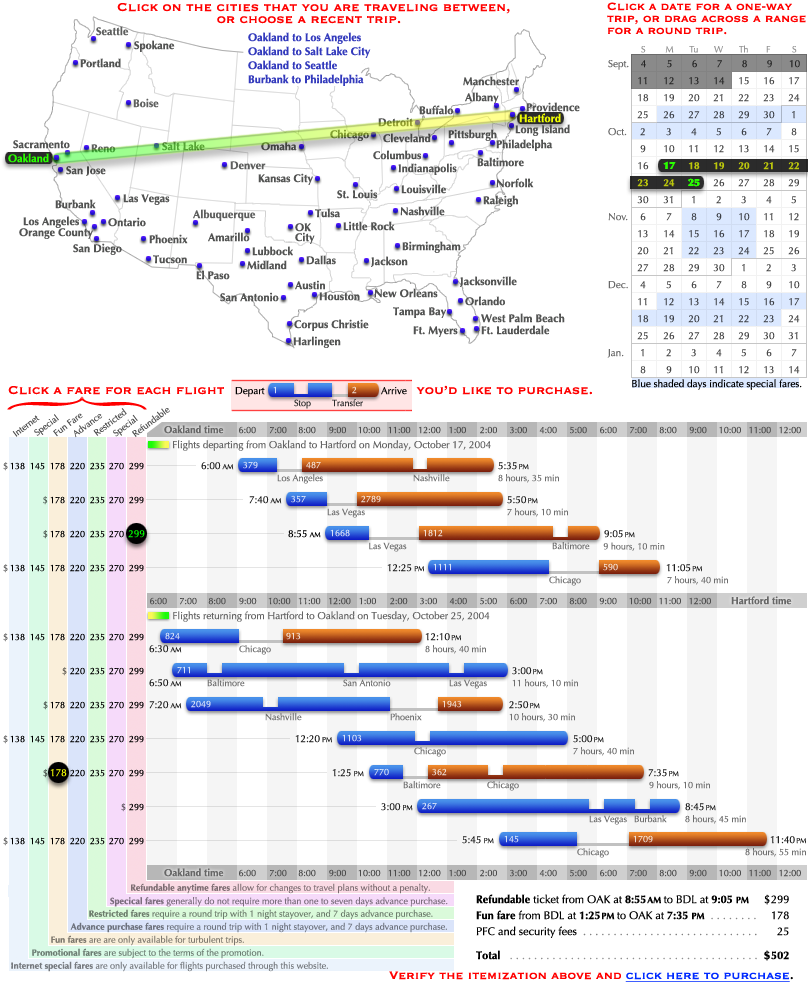

#I recently created a program for planning trips on BART, the San Francisco bay area subway system, in the form of a “Dashboard widget” (mini-application) for the Apple Macintosh.

#The design has clearly been successful. Even though the target audience is fairly small (SF bay area public transportation riders with the latest Mac OS and knowledge of how to customize it), user feedback has been wildly enthusiastic, and the widget received the 2007 Apple Design Award, as well as Macworld magazine’s rare perfect rating. If you are unfamiliar with the widget, you can watch a one-minute demo movie:

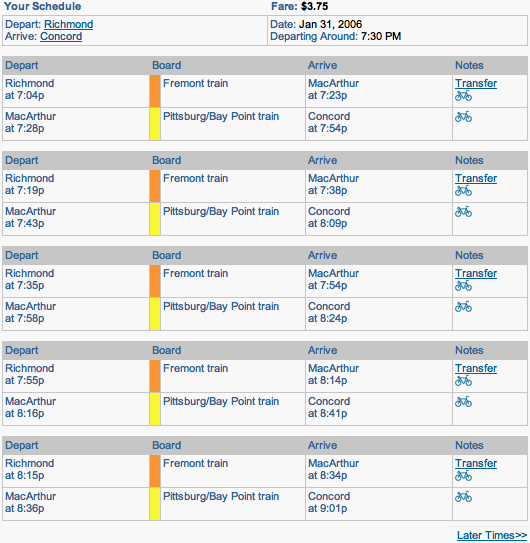

#As information software, the widget was approached primarily as a graphic design project. I will discuss how its design exemplifies the viewpoints in this paper, and also point out where it falls short and could be improved.** The widget originally inspired this paper, not vice-versa. Thus, the widget does not reflect new ideas conceived while writing this. (Yet!) I will also compare it to the trip planner on the official BART website, which follows the typical mechanical paradigm of drop-down menus, “submit” button, and table of results.

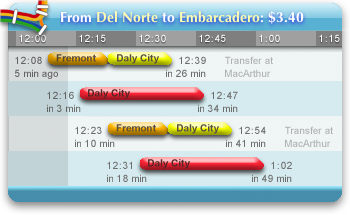

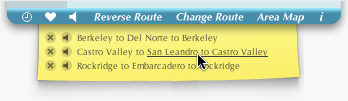

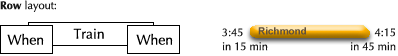

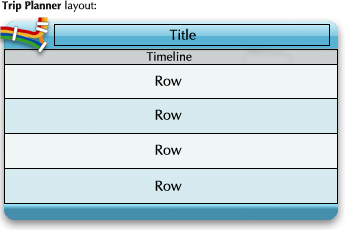

#The BART widget was designed around three classical forms of graphical communication: the timeline, the map, and the sentence.

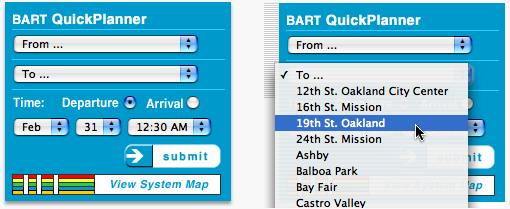

Showing the data

#Information software allows the user to ask and answer questions, make comparisons, and draw conclusions. In the case of trip planning, some questions are:

- When is the next train leaving? How long is that from now?

- When is that train arriving? How long is that from now?

- Which line is that train on?

- Does that trip have a transfer? If so, when, where, and for how long?

- What about the train after that? And after that?

- How frequently do the trains come?

- What about trains around 7:00 am on Tuesday?

#Users use the answers to compare the available trips, and draw a conclusion about which to take. Naturally, it must be possible for that conclusion to take the form of a plan: “Which train will I take? I will take the 7:32 train.” However, the plan then becomes a mental burden on the user. A good design would also allow for a series of quick boolean conclusions over time: “Should I start walking to the station now? No… no… no… okay, let’s go.”

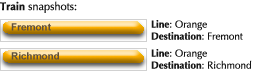

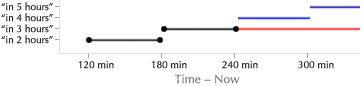

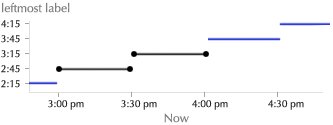

#The choice of graphical representation depends on what sort of data space is left after context-based winnowing. What context can be inferred?

#The user is expecting to leave around a particular time; thus, the graphic can exclude trips outside of some narrow time window. Furthermore, the most common time is “soon”; thus, the software can initially assume that the time window is “the near future.” Also, notice that all of the questions implicitly refer to a single route—a particular origin and destination pair. That is, the user wants to compare trips along the time dimension, but not the space dimensions. Thus, the graphic need only concern itself with a single route, which we last-value predict to be “the same as last time.”** A learning predictor for the route is presented later in the paper.
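#The winnowing just described is essentially a filter over two context dimensions. A minimal sketch, assuming trips are dictionaries with hypothetical `origin`, `destination`, and `depart` keys and that “the near future” means the next hour (the window length is an assumption, not a value taken from the widget):

```python
from datetime import datetime, timedelta


def winnow_trips(trips, route, now, window=timedelta(hours=1)):
    """Keep only trips on `route` departing within `window` after `now`,
    ordered by departure time.

    route: (origin, destination), e.g. the last-value prediction
           "the same as last time"
    """
    origin, destination = route
    end = now + window
    kept = [
        t for t in trips
        if t["origin"] == origin
        and t["destination"] == destination
        and now <= t["depart"] <= end
    ]
    return sorted(kept, key=lambda t: t["depart"])
```

#Everything outside the predicted route and time window never reaches the graphic, which is what leaves only a handful of trips to draw.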

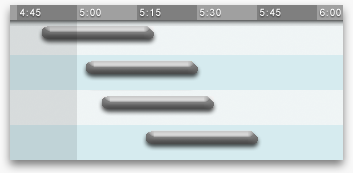

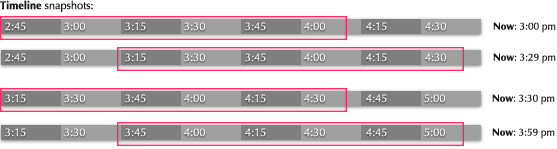

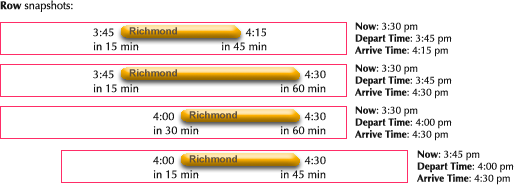

#After winnowing the data, we are left with a handful of trips: ordered, overlapping spans of time. We need a graphical construct that allows the viewer to compare the start, end, and length of each span. A natural choice is a time bar graph, which allows for important qualitative comparisons at a glance: When does each span start and end? How long is each span? How close together are they?** The time bar graph may have been invented by proto-chemist Joseph Priestley in 1765 to compare the lifespans of various historical figures. Priestley’s chart inspired William Playfair to invent the modern statistical bar graph. Howard Wainer claims to have uncovered a bar graph from 3000 years earlier, plotting population changes in the tribes of Israel after the exodus. See Graphic Discovery (2005), p18.

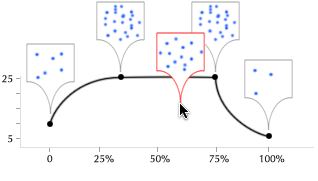

#The most important context, the current time, can be emphasized by shading the past. The most important data point, the next train, can be emphasized by keeping it in a constant location, the second row. This answers the most important qualitative questions: Is the next train coming soon? Did I just miss a train? For an experienced viewer, the conclusive question, “Should I start walking to the station now?”, can be answered literally at a glance.
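#Keeping the next train in a fixed row is a small indexing problem. The sketch below is one way to do it, not the widget’s actual code: `visible_rows` is a hypothetical helper, times are any sortable values (minutes after midnight in the comments), and showing the most recently departed train in the first row is an assumption suggested by the “Did I just miss a train?” question.

```python
from bisect import bisect_left


def visible_rows(departures, now, row_count=4):
    """Choose which departures to draw so that the next one is always
    in the second row (index 1).

    departures: sorted list of departure times
    Returns a list of length row_count; None marks an empty row.
    """
    next_index = bisect_left(departures, now)  # first departure at or after now
    first = next_index - 1                     # row 0: the train just missed
    rows = []
    for i in range(first, first + row_count):
        rows.append(departures[i] if 0 <= i < len(departures) else None)
    return rows
```

#Because the next train never moves, an experienced viewer only has to look at one spot to decide whether to leave.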

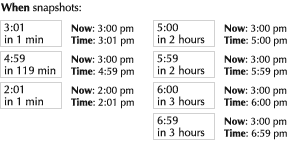

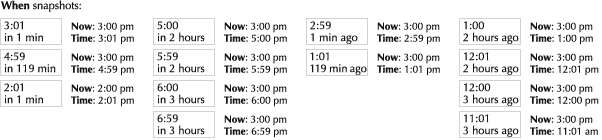

#The graphic can then be unobtrusively annotated with quantitative information, so closer inspection answers all of the questions precisely:

#Transfers can be regarded as segmentation of the overall trip. The question that must be answered exactly is where to transfer. The questions of when and how long should be answered qualitatively; the exact times would be irrelevant clutter.** A better design would probably place the transfer station name closer to the graphical representation of the transfer, instead of over to the side.

#And that’s about it. Although there clearly is more to the widget than this, most of the “user experience” is represented by the picture above. That is, this software is normally “used” by simply looking at it, with no interaction whatsoever. In contradiction to the premise of interaction design, this software is at its best when acting non-interactively.

#Accordingly, all interactive mechanisms—the buttons and bookmarks list—are hidden when the mouse pointer is outside the widget. Unless the user deliberately wants to interact with it, the widget appears as a pure information graphic with no manipulative clutter.** Tufte uses the term “administrative debris.”

#Of course, if the predicted context is wrong, the user must interact to correct it. This involves navigation in the usual two dimensions, time and space.

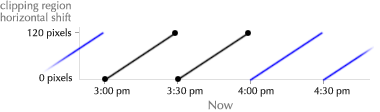

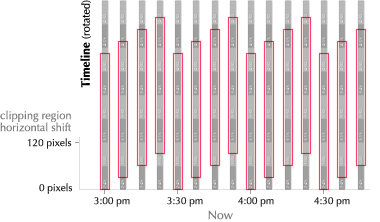

Navigating through time

#The widget initially assumes a time window of “the near future.”** This window changes over time, of course. The widget naturally stays in sync, always displaying relevant information. A button to manually “refresh” the display would be almost obscenely mechanical. There are two cases in which this context is incorrect:

- The user wants to see even later trips.

- The user wants to plan for some other time entirely.

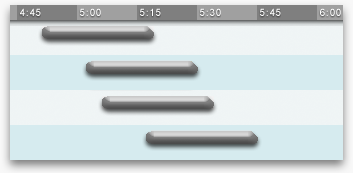

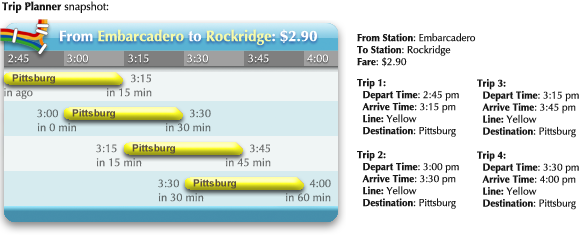

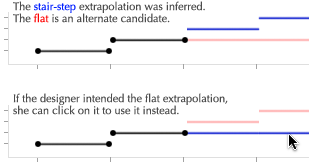

#Relative navigation. To see earlier or later trips, the user can simply drag the graphic around. A cursor change suggests this, as well as a brief message when the widget is first started.** The mouse scrollwheel and keyboard arrow keys also serve to navigate through time. The “underlying” graphic is infinite; the user can scroll forever. Thus, a GUI scrollbar would be inappropriate.

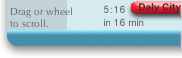

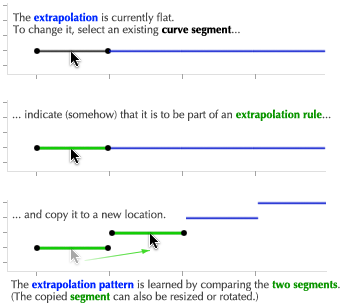

#Absolute navigation. To plan around an arbitrary time, the user clicks a button to reveal the hours of the day, from morning to night, laid out linearly. The user can then click anywhere on the mechanism to jump to that time.

#The mechanism’s labeling is intentionally vague, so the user will click approximately in the right area, and then continue to drag left or right until the correct information is displayed on the chart of train schedules. This forces the user to keep her eyes on the information graphic, instead of wasting effort precisely manipulating the navigation mechanism.** This is the same concept suggested by the Google Maps prediction list above. Instead of precise, tedious absolute navigation, offer quick ballpark navigation, followed by relative navigation in a tight feedback loop. Unlike the time of day, the predicted date (today) is probably close—few people plan subway trips weeks in advance. Thus, the date control is relative.
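#Ballpark-then-refine navigation can be sketched as two small functions. All names and numbers here are assumptions made for illustration: `click_to_minutes` and `drag` are hypothetical, the strip is assumed to span 5:00 am to midnight, and the drag direction and scale are arbitrary choices.

```python
def click_to_minutes(x, width, day_start=5 * 60, day_end=24 * 60):
    """Ballpark absolute navigation: map a click at horizontal position x
    on a strip `width` pixels wide to minutes after midnight."""
    fraction = min(max(x / width, 0.0), 1.0)  # clamp clicks outside the strip
    return round(day_start + fraction * (day_end - day_start))


def drag(minutes, dx_pixels, minutes_per_pixel=2):
    """Relative navigation: dragging the graphic right by dx_pixels
    reveals earlier times (assumed direction)."""
    return minutes - dx_pixels * minutes_per_pixel
```

#A click only needs to land in roughly the right region; the drag that follows does the fine adjustment while the user watches the schedule itself.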

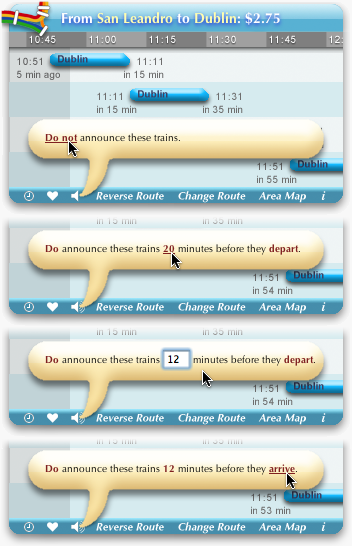

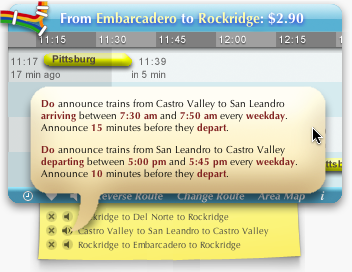

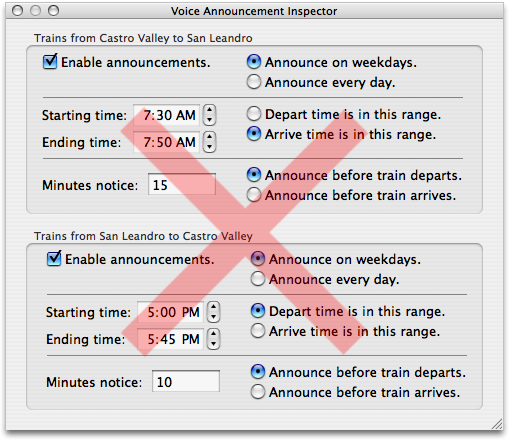

Navigating through space

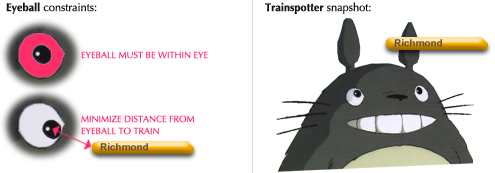

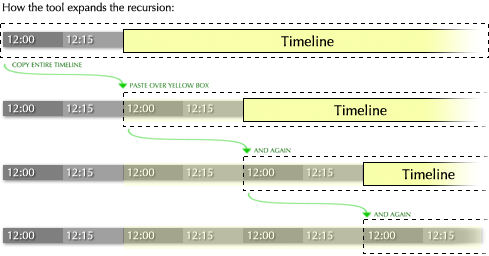

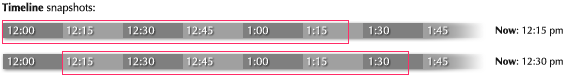

#The assumed context includes where the user is coming from and where she is going. The assumption is “the same as last time”; that is, this appears as explicit state. There are three cases for which the context is incorrect.